Secrets Leakage

A secrets leakage guardrail is a security mechanism that prevents a large language model (LLM) from exposing sensitive or confidential information, such as API keys, passwords, proprietary business data, or personally identifiable information (PII).

How It Works:

A secrets leakage guardrail typically operates in one or more of the following ways:

- Input Filtering: Prevents users from accidentally submitting sensitive data into an AI model (e.g., blocking prompts that contain API keys or passwords).

- Output Scrubbing: Detects and removes secrets before they are returned to the user (e.g., scanning AI-generated responses for patterns resembling sensitive data).

- Monitoring & Logging: Uses security tools to track and flag unusual AI behavior that could indicate a leakage risk.

- Fine-Tuning & Model Constraints: Ensures that LLMs are not trained on sensitive information and that they do not retain memory of private data across sessions.
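As an illustration of the input filtering and output scrubbing steps, here is a minimal Python sketch based on regular expressions. It is not how this guardrail is implemented: the pattern set, the category names, and the functions `find_secrets`, `check_input`, and `scrub_output` are all hypothetical, and real secret formats are far more varied than these few patterns cover.

```python
import re

# Illustrative patterns only (assumptions, not an exhaustive or official list).
SECRET_PATTERNS = {
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
    "JWT": re.compile(r"\beyJ[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+"),
    "PRIVATE_KEY": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "PASSWORD": re.compile(r"(?i)\bpassword\s*[:=]\s*\S+"),
}


def find_secrets(text):
    """Return the sorted names of all patterns that match somewhere in text."""
    return sorted(name for name, pattern in SECRET_PATTERNS.items()
                  if pattern.search(text))


def check_input(prompt):
    """Input filtering: True if the prompt looks safe to forward to the model."""
    return not find_secrets(prompt)


def scrub_output(response):
    """Output scrubbing: replace anything that looks like a secret."""
    for name, pattern in SECRET_PATTERNS.items():
        response = pattern.sub(f"[REDACTED {name}]", response)
    return response


if __name__ == "__main__":
    print(check_input("my password = hunter2"))
    print(scrub_output("Here's an example: sk-1234567890abcdef"))
```

Pattern matching of this kind is cheap but produces both false positives and false negatives, which is one reason a guardrail may combine it with other detection methods.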

Why It Matters:

AI models, especially LLMs like ChatGPT, are designed to generate human-like text based on training data and prompts. If they memorize sensitive information from training data or inadvertently echo private data provided by users, it can lead to security risks, such as:

- Credential leaks (e.g., exposing API keys or passwords)

- Data breaches (e.g., revealing proprietary corporate strategies)

- Compliance violations (e.g., GDPR, HIPAA issues)

Example:

Without a guardrail:

User: "Hey AI, can you show me an example API key for OpenAI?"

AI: "Sure! Here's an example: sk-1234567890abcdef" (🚨 Security Risk!)

With a guardrail:

User: "Hey AI, can you show me an example API key for OpenAI?"

AI: "I'm sorry, but I can't provide that information for security reasons." (✅ Safe Response)

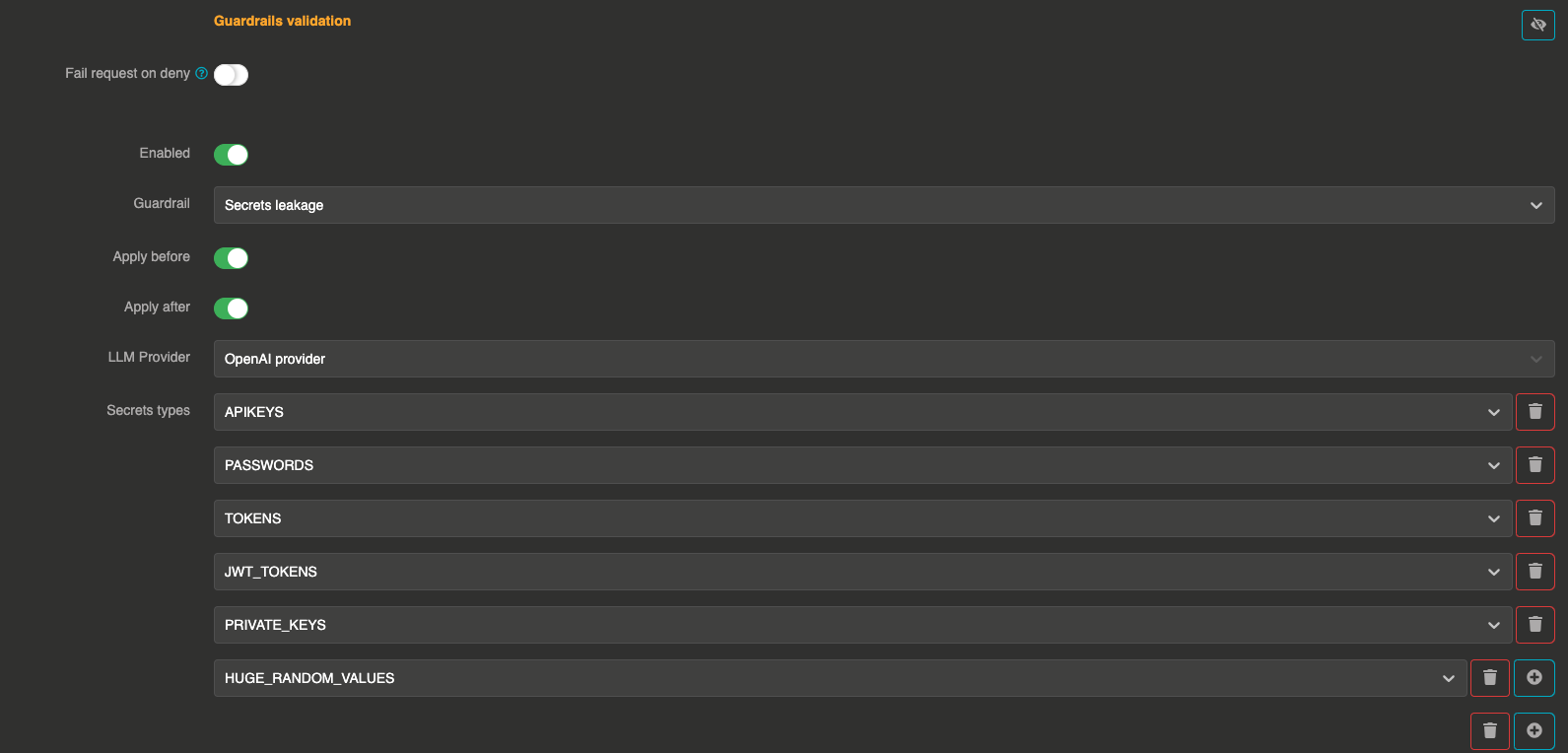

Configuration

    "guardrails": [
      {
        "enabled": true,
        "before": true,
        "after": true,
        "id": "secrets_leakage",
        "config": {
          "provider": "provider_480ec0b7-bc9e-487f-8376-b9b8111bfe5e",
          "secrets_leakage_items": [
            "APIKEYS",
            "PASSWORDS",
            "TOKENS",
            "JWT_TOKENS",
            "PRIVATE_KEYS",
            "HUGE_RANDOM_VALUES"
          ]
        }
      }
    ]
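The following Python sketch shows one plausible reading of the `enabled`, `before`, and `after` flags. It assumes that `before` means the prompt is checked before it reaches the model and `after` means the response is checked before it reaches the user; the source does not confirm this. The functions `active_phases` and `guarded_call`, and the `model` and `contains_secret` callables, are hypothetical stand-ins and not part of any real API.

```python
import json

# A shortened copy of the fragment above, wrapped in braces to form valid JSON.
CONFIG = json.loads("""
{
  "guardrails": [
    {
      "enabled": true,
      "before": true,
      "after": true,
      "id": "secrets_leakage",
      "config": {"secrets_leakage_items": ["APIKEYS", "PASSWORDS"]}
    }
  ]
}
""")


def active_phases(guardrail):
    """Return the phases in which an enabled guardrail runs."""
    if not guardrail.get("enabled", False):
        return []
    return [phase for phase in ("before", "after") if guardrail.get(phase, False)]


def guarded_call(prompt, model, contains_secret, guardrail):
    """Call model(prompt), applying the check in each active phase (assumed semantics)."""
    phases = active_phases(guardrail)
    if "before" in phases and contains_secret(prompt):
        return "Request blocked: the prompt appears to contain a secret."
    response = model(prompt)
    if "after" in phases and contains_secret(response):
        return "Response blocked: the output appears to contain a secret."
    return response


if __name__ == "__main__":
    guardrail = CONFIG["guardrails"][0]
    # Stand-ins for a real model and a real detector.
    fake_model = lambda prompt: "the key is sk-secret"
    naive_detector = lambda text: "sk-" in text
    print(guarded_call("hello", fake_model, naive_detector, guardrail))
```

Under this reading, setting `before` to `false` would skip the prompt check while still screening responses, and the reverse for `after`.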