👀 Expose your LLM Provider

now that your provider is fully setup, you can expose it to your organization. The idea here is to do it through an Otoroshi route

with a plugin of type backend that will handle passing incoming request to the actual LLM provider.

OpenAI compatible plugins

we provide a set of plugin capable of exposing any supported LLM provider through a compatible OpenAI API. This is the official way of doing LLM exposition.

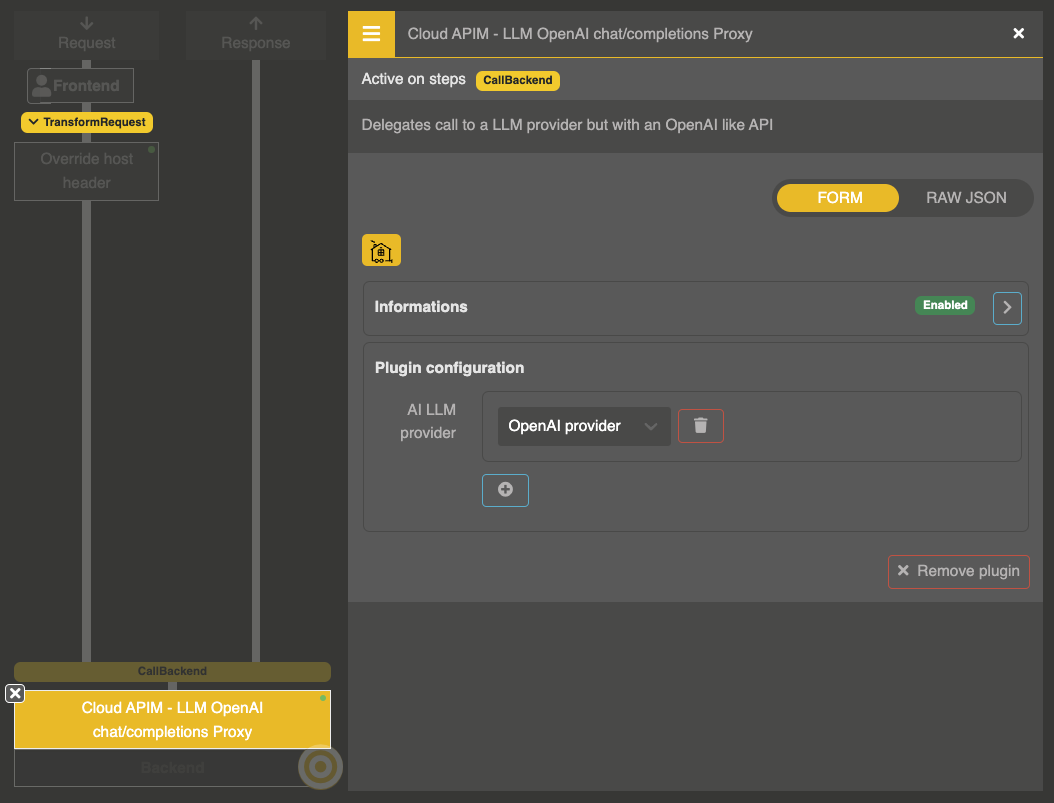

LLM OpenAI compatible chat/completions proxy

this plugins is compatible with the OpenAI chat completion API, also in streaming style

you can directly call it this way:

curl https://my-own-llm-endpoint.on.otoroshi/v1/chat/completions \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OTOROSHI_API_KEY" \

-d '{

"model": "gpt-4o",

"messages": [

{

"role": "user",

"content": "Hello how are you!"

}

]

}'

with a response looking like

{

"id": "chatcmpl-B9MBs8CjcvOU2jLn4n570S5qMJKcT",

"object": "chat.completion",

"created": 1741569952,

"model": "gpt-4o",

"choices": [

{

"index": 0,

"message": {

"role": "assistant",

"content": "Hello! How can I assist you today?",

"refusal": null,

"annotations": []

},

"logprobs": null,

"finish_reason": "stop"

}

],

"usage": {

"prompt_tokens": 19,

"completion_tokens": 10,

"total_tokens": 29

}

}

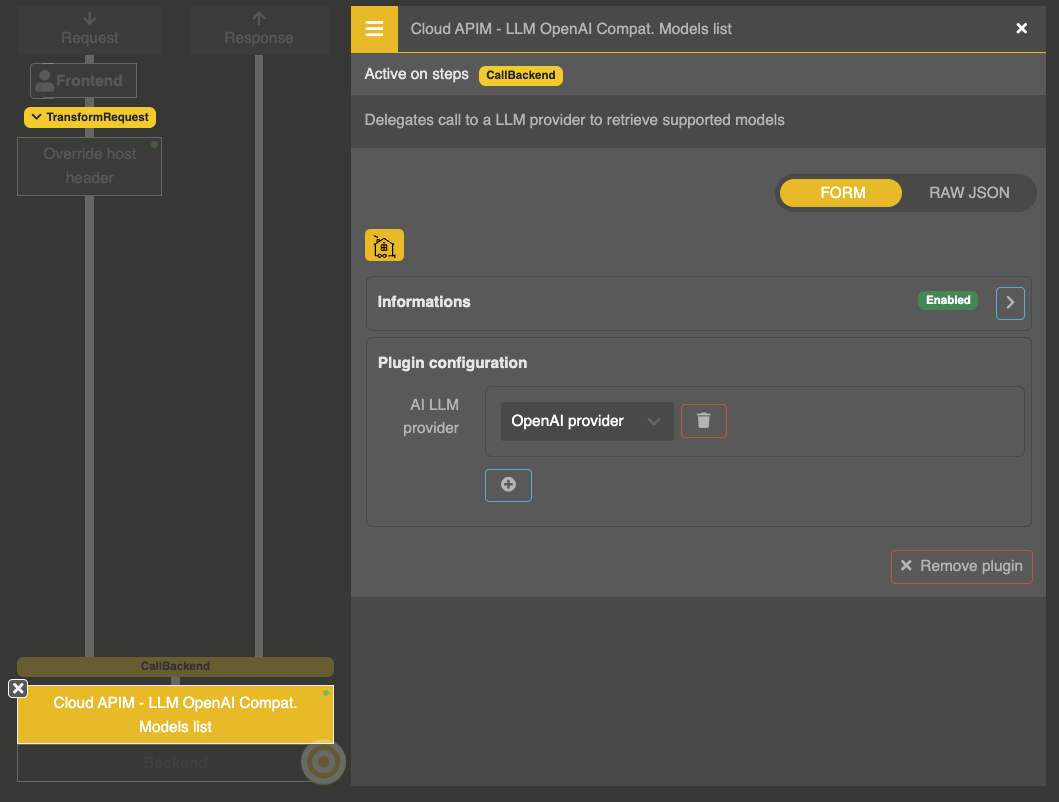

LLM OpenAI compatible models list

there is also a plugin to expose the provider models list compatible with the OpenAI API

you can directly call it this way:

curl https://my-own-llm-endpoint.on.otoroshi/v1/models \

-H "Authorization: Bearer $OTOROSHI_API_KEY"

with a response looking like

{

"object": "list",

"data": [

{

"id": "o1-mini",

"object": "model",

"created": 1686935002,

"owned_by": "openai"

},

{

"id": "gpt-4",

"object": "model",

"created": 1686935002,

"owned_by": "openai"

}

]

}