Wasm Functions

🤖 What Are Tool Calls in LLMs?

Tool calls in Large Language Models (LLMs) allow the model to interact with external tools, APIs, or functions to fetch real-world data or perform tasks beyond its native capabilities.

Instead of relying solely on pre-trained knowledge, LLMs can dynamically query functions like get_flight_times to provide accurate, real-time information.

🔹 How Do Tool Calls Work?

When an LLM determines that a request matches a tool's function, it emits a structured call (similar to an API request) that names the function and its arguments. The function is then executed, and the model uses the result to compose the answer delivered to the user.

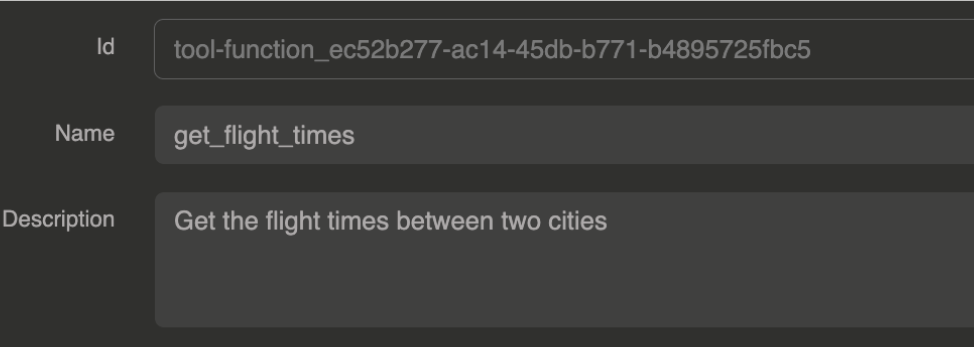
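The flow described above can be sketched in a few lines of JavaScript. This is a minimal illustration, not the gateway's implementation: the `tools` registry, the `dispatchToolCall` helper, and the hard-coded duration are hypothetical. The call shape (a function name plus arguments encoded as a JSON string) follows the OpenAI-style tool call format.

```javascript
// Hypothetical registry mapping tool names to implementations.
const tools = {
  // Stub for illustration only: returns a fixed duration.
  get_flight_times: ({ departure, arrival }) => ({ departure, arrival, duration: "5h 30m" }),
};

// Executes a structured tool call emitted by the model and returns
// the result as a JSON string that can be handed back to the model.
function dispatchToolCall(toolCall) {
  const known = Object.prototype.hasOwnProperty.call(tools, toolCall.name);
  if (!known) {
    return JSON.stringify({ error: `unknown tool: ${toolCall.name}` });
  }
  const args = JSON.parse(toolCall.arguments); // arguments arrive as a JSON string
  return JSON.stringify(tools[toolCall.name](args));
}

// Example: the structured call a model might emit for a flight question.
const result = dispatchToolCall({
  name: "get_flight_times",
  arguments: '{"departure":"JFK","arrival":"LAX"}',
});
console.log(result);
```

Returning an error object for unknown tools, rather than throwing, lets the model see the failure and respond to it.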

✈️ What is get_flight_times?

The get_flight_times function is a tool call that retrieves flight durations between two locations. Given a departure and an arrival location, it returns an estimate of how long the flight will take.

🛠️ How Does it Work?

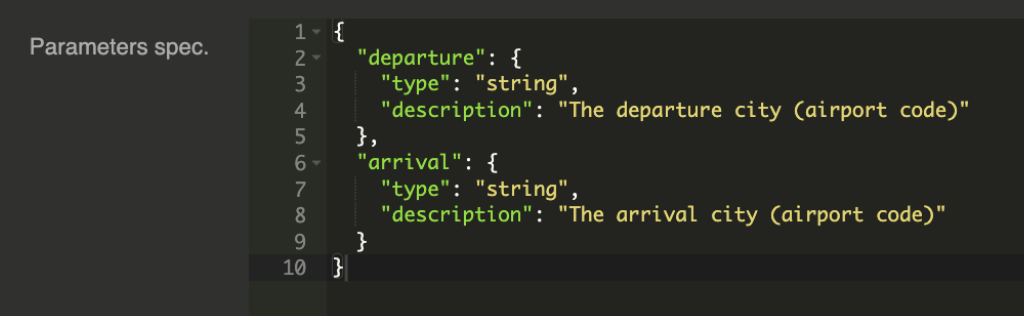

The function operates by taking in two key parameters:

- departure 🛫 – The airport or city where your flight begins.

- arrival 🛬 – The airport or city where your flight ends.

Once you provide these details, get_flight_times fetches the estimated duration of direct and indirect flights between the specified locations. The function considers factors like average flight speeds, distances between airports, and typical air traffic conditions.

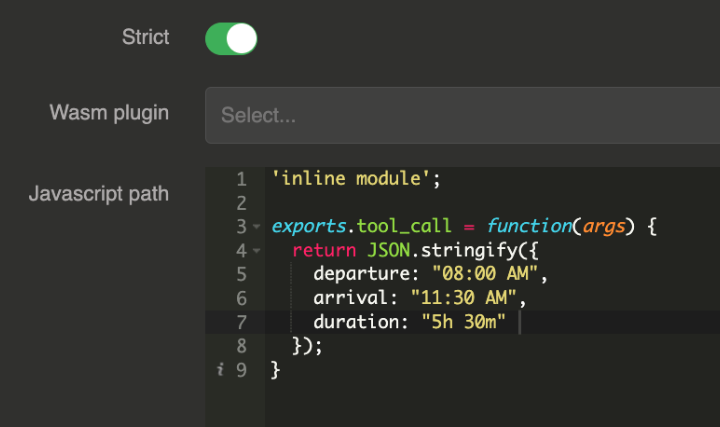
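The source does not show how get_flight_times is implemented. As a rough sketch of the distance-and-speed part of such an estimate, the snippet below computes the great-circle distance between two airports with the haversine formula and divides it by an assumed cruise speed. The coordinates table, the 800 km/h speed, and the 30-minute overhead are illustrative assumptions; air traffic and layovers are ignored.

```javascript
// Approximate airport coordinates in degrees (illustrative values).
const AIRPORTS = {
  JFK: { lat: 40.6413, lon: -73.7781 },
  LAX: { lat: 33.9416, lon: -118.4085 },
};

const EARTH_RADIUS_KM = 6371;
const CRUISE_SPEED_KMH = 800; // assumed average speed
const OVERHEAD_HOURS = 0.5;   // assumed taxi, climb, and descent time

const toRadians = (deg) => (deg * Math.PI) / 180;

// Great-circle distance in kilometres (haversine formula).
function distanceKm(a, b) {
  const dLat = toRadians(b.lat - a.lat);
  const dLon = toRadians(b.lon - a.lon);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRadians(a.lat)) * Math.cos(toRadians(b.lat)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(h));
}

// Hypothetical stand-in for get_flight_times (direct flights only).
function getFlightTimes({ departure, arrival }) {
  const from = AIRPORTS[departure];
  const to = AIRPORTS[arrival];
  if (!from || !to) throw new Error("unknown airport code");
  const hours = distanceKm(from, to) / CRUISE_SPEED_KMH + OVERHEAD_HOURS;
  const totalMinutes = Math.round(hours * 60);
  return `${Math.floor(totalMinutes / 60)}h ${totalMinutes % 60}m`;
}

console.log(getFlightTimes({ departure: "JFK", arrival: "LAX" }));
```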

📝 Example Usage

Imagine you want to check the flight duration from New York (JFK) to Los Angeles (LAX):

get_flight_times(departure="JFK", arrival="LAX")

The function will return the estimated flight duration, such as 5h 30m for a direct flight or longer if layovers are involved.

Next flight function example

In this example, we create a tool function named next_flight that takes two IATA airport codes (for example, CDG for Paris Charles de Gaulle) and returns a flight number along with the number of hours until takeoff. Both values are randomly generated, so the function is a mock meant to demonstrate the mechanism.

Function configuration

'inline module';

// Receives the tool arguments as a JSON string.
exports.tool_call = function(args) {
  const formattedArguments = JSON.parse(args);
  // Returns a JSON string with a random flight number and a random delay in hours.
  return JSON.stringify({
    nextflight: `flight ${parseInt(Math.random() * 10000)} from ${formattedArguments["departure"]} to ${formattedArguments["arrival"]} is taking off in ${parseInt(Math.random() * 10)} hours`
  })
}
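Before wiring the function into the gateway, it can help to exercise equivalent logic locally. The sketch below copies the behavior into a plain `toolCall` function (a hypothetical local name, using `Math.floor` for the integer conversion) and calls it with arguments as a JSON string, getting a JSON string back. It assumes a Node.js runtime rather than the QuickJs backend.

```javascript
// Local copy of the tool function logic, for testing outside the gateway.
function toolCall(args) {
  const { departure, arrival } = JSON.parse(args);
  const flightNumber = Math.floor(Math.random() * 10000);
  const hours = Math.floor(Math.random() * 10);
  return JSON.stringify({
    nextflight: `flight ${flightNumber} from ${departure} to ${arrival} is taking off in ${hours} hours`,
  });
}

// Arguments go in as a JSON string, matching the JSON.parse(args) call above.
const raw = toolCall(JSON.stringify({ departure: "CDG", arrival: "DXB" }));
const parsed = JSON.parse(raw);
console.log(parsed.nextflight);
```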

Function parameters

{
  "departure": {
    "type": "string",
    "description": "The departure airport (IATA airport Code)"
  },
  "arrival": {
    "type": "string",
    "description": "The arrival airport (IATA airport Code)"
  }
}
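For orientation, here is how such a parameter map could be wrapped into an OpenAI-style function tool definition before being sent to the model. How the gateway actually performs this conversion is not shown in the source; the `toToolDefinition` helper and the choice to mark every parameter as required are assumptions made for this sketch.

```javascript
const parameters = {
  departure: { type: "string", description: "The departure airport (IATA airport Code)" },
  arrival: { type: "string", description: "The arrival airport (IATA airport Code)" },
};

// Hypothetical helper: wraps a flat parameter map in a JSON Schema object
// and in the OpenAI-style { type: "function", function: {...} } envelope.
function toToolDefinition(name, description, params) {
  return {
    type: "function",
    function: {
      name,
      description,
      parameters: {
        type: "object",
        properties: params,
        required: Object.keys(params), // assumption: all parameters are required
        additionalProperties: false,
      },
    },
  };
}

const definition = toToolDefinition(
  "next_flight",
  "This function returns the next flight.",
  parameters
);
console.log(JSON.stringify(definition, null, 2));
```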

Request to our endpoint

curl --request POST \
  --url http://wasm-tool-function.oto.tools:8080/ \
  --header 'content-type: application/json' \
  --data '{
  "messages": [
    {
      "role": "user",
      "content": "Could you give me the next flight from CDG to DXB please ?"
    }
  ]
}'
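The same request can be sent from JavaScript. This sketch assumes Node.js 18 or later (for the built-in `fetch`) and a gateway reachable at the URL used in the curl command; `buildRequest` and `askNextFlight` are hypothetical helper names.

```javascript
const ENDPOINT = "http://wasm-tool-function.oto.tools:8080/";

// Builds the fetch options for a single user message.
function buildRequest(question) {
  return {
    method: "POST",
    headers: { "content-type": "application/json" },
    body: JSON.stringify({
      messages: [{ role: "user", content: question }],
    }),
  };
}

// Sends the question and returns the assistant's reply text.
async function askNextFlight(question) {
  const response = await fetch(ENDPOINT, buildRequest(question));
  if (!response.ok) {
    throw new Error(`request failed with status ${response.status}`);
  }
  const completion = await response.json();
  return completion.choices[0].message.content;
}

// Usage (requires a running gateway):
// askNextFlight("Could you give me the next flight from CDG to DXB please ?").then(console.log);
```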

LLM Response with our tool function next_flight

{
  "id": "chatcmpl-FzOsLwlxF7cgTkpBfSPfhYEEvw53X274",
  "object": "chat.completion",
  "created": 1743519776,
  "model": "gpt-4o-mini",
  "system_fingerprint": "fp-Jz72gmHTIAQw2xYamDRy3Q8kMSPUsMb0",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "The next flight from CDG (Charles de Gaulle Airport) to DXB (Dubai International Airport) is flight 3755, which is taking off in 9 hours."
      },
      "logprobs": null,
      "finish_reason": "stop"
    }
  ],
  "usage": {
    "prompt_tokens": 209,
    "completion_tokens": 37,
    "total_tokens": 246,
    "completion_tokens_details": {
      "reasoning_tokens": 0
    }
  }
}
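Note that this response contains only the final assistant message; the intermediate tool call does not appear in it. A client typically needs just a few fields from the payload. The sketch below, with a hypothetical `summarizeCompletion` helper, pulls out the reply text, the finish reason, and the token count from a trimmed copy of the response.

```javascript
// Trimmed copy of the response shown above.
const completion = {
  model: "gpt-4o-mini",
  choices: [
    {
      index: 0,
      message: {
        role: "assistant",
        content:
          "The next flight from CDG (Charles de Gaulle Airport) to DXB (Dubai International Airport) is flight 3755, which is taking off in 9 hours.",
      },
      finish_reason: "stop",
    },
  ],
  usage: { prompt_tokens: 209, completion_tokens: 37, total_tokens: 246 },
};

// Hypothetical helper: extracts the reply text and basic accounting.
function summarizeCompletion(c) {
  const choice = c.choices[0];
  return {
    text: choice.message.content,
    finished: choice.finish_reason === "stop",
    totalTokens: c.usage.total_tokens,
  };
}

const summary = summarizeCompletion(completion);
console.log(summary.text);
console.log(`finished: ${summary.finished}, tokens: ${summary.totalTokens}`);
```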

Full entity configuration

{
  "_loc": {
    "tenant": "default",
    "teams": [
      "default"
    ]
  },
  "id": "tool-function_f6562221-1c0f-4f8e-aae6-279beb0144dd",
  "name": "next_flight",
  "description": "This function returns the next flight.",
  "metadata": {},
  "tags": [],
  "strict": true,
  "parameters": {
    "departure": {
      "type": "string",
      "description": "The departure airport (IATA airport Code)"
    },
    "arrival": {
      "type": "string",
      "description": "The arrival airport (IATA airport Code)"
    }
  },
  "required": null,
  "backend": {
    "kind": "QuickJs",
    "options": {
      "jsPath": "'inline module';\n\nexports.tool_call = function(args) {\n const formattedArguments = JSON.parse(args);\n return JSON.stringify({\n nextflight: `flight ${parseInt(Math.random() * 10000)} from ${formattedArguments[\"departure\"]} to ${formattedArguments[\"arrival\"]} is taking off in ${parseInt(Math.random() * 10)} hours`\n })\n}"
    }
  },
  "kind": "ai-gateway.extensions.cloud-apim.com/ToolFunction"
}
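The `jsPath` option holds the function source as a single escaped string. To see how such a string maps back to a callable function, the sketch below evaluates a shortened, deterministic stand-in for it in Node.js using `new Function` and a hand-made `exports` object. This is only a local illustration: the gateway runs the code in its QuickJs backend, `loadToolCall` is a hypothetical helper, and evaluating source this way should be reserved for code you trust.

```javascript
// Shortened stand-in for the "jsPath" string (deterministic for readability).
const jsPath =
  "'inline module';\n\nexports.tool_call = function(args) {\n" +
  "  const a = JSON.parse(args);\n" +
  "  return JSON.stringify({ route: `${a.departure}-${a.arrival}` });\n}";

// Hypothetical loader: evaluates the source with a fresh `exports` object
// and returns the exported tool_call function.
function loadToolCall(source) {
  const moduleExports = {};
  new Function("exports", source)(moduleExports);
  if (typeof moduleExports.tool_call !== "function") {
    throw new Error("source does not export tool_call");
  }
  return moduleExports.tool_call;
}

const toolCallFromConfig = loadToolCall(jsPath);
console.log(toolCallFromConfig('{"departure":"CDG","arrival":"DXB"}'));
// prints {"route":"CDG-DXB"}
```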

Provider configuration

{
  "_loc": {
    "tenant": "default",
    "teams": [
      "default"
    ]
  },
  "id": "provider_6699f127-34d1-4fef-b3ad-6bcf592ed9c6",
  "name": "OpenAI provider + tool wasm function",
  "description": "An OpenAI LLM api provider",
  "metadata": {},
  "tags": [],
  "provider": "openai",
  "connection": {
    "base_url": "https://api.openai.com/v1",
    "token": "${vault://local/openai-token}",
    "timeout": 30000
  },
  "options": {
    "model": "gpt-4o-mini",
    "frequency_penalty": null,
    "logit_bias": null,
    "logprobs": null,
    "top_logprobs": null,
    "max_tokens": null,
    "n": 1,
    "presence_penalty": null,
    "response_format": null,
    "seed": null,
    "stop": null,
    "stream": false,
    "temperature": 1,
    "top_p": 1,
    "tools": null,
    "tool_choice": null,
    "user": null,
    "wasm_tools": [
      "tool-function_f6562221-1c0f-4f8e-aae6-279beb0144dd"
    ],
    "mcp_connectors": [],
    "allow_config_override": true
  },
  "provider_fallback": null,
  "context": {
    "default": null,
    "contexts": []
  },
  "models": {
    "include": [],
    "exclude": []
  },
  "guardrails": [],
  "guardrails_fail_on_deny": false,
  "cache": {
    "strategy": "none",
    "ttl": 300000,
    "score": 0.8
  },
  "kind": "ai-gateway.extensions.cloud-apim.com/Provider"
}
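The provider is linked to the tool function through `options.wasm_tools`, which lists tool function ids. A typo in that list is easy to miss, so a small consistency check can be handy when these entities are managed as files. The sketch below uses trimmed stand-ins for the two entities, and `findMissingTools` is a hypothetical helper, not part of the gateway.

```javascript
// Minimal stand-ins for the two entities, keeping only the fields used here.
const toolFunctions = [
  { id: "tool-function_f6562221-1c0f-4f8e-aae6-279beb0144dd", name: "next_flight" },
];
const provider = {
  id: "provider_6699f127-34d1-4fef-b3ad-6bcf592ed9c6",
  options: { wasm_tools: ["tool-function_f6562221-1c0f-4f8e-aae6-279beb0144dd"] },
};

// Hypothetical check: returns the referenced ids that match no known tool function.
function findMissingTools(providerEntity, knownTools) {
  const knownIds = new Set(knownTools.map((t) => t.id));
  return (providerEntity.options.wasm_tools || []).filter((id) => !knownIds.has(id));
}

console.log(findMissingTools(provider, toolFunctions)); // prints []
```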

Endpoint configuration

{
  "_loc": {
    "tenant": "default",
    "teams": [
      "default"
    ]
  },
  "id": "route_52b9e4b69-d161-4e26-9a32-4bcc4c25e2db",
  "name": "wasm tool function example",
  "description": "wasm tool function example",
  "tags": [],
  "metadata": {},
  "enabled": true,
  "debug_flow": false,
  "export_reporting": false,
  "capture": false,
  "groups": [
    "default"
  ],
  "bound_listeners": [],
  "frontend": {
    "domains": [
      "wasm-tool-function.oto.tools"
    ],
    "strip_path": true,
    "exact": false,
    "headers": {},
    "query": {},
    "methods": []
  },
  "backend": {
    "targets": [
      {
        "id": "target_1",
        "hostname": "request.otoroshi.io",
        "port": 443,
        "tls": true,
        "weight": 1,
        "predicate": {
          "type": "AlwaysMatch"
        },
        "protocol": "HTTP/1.1",
        "ip_address": null,
        "tls_config": {
          "certs": [],
          "trusted_certs": [],
          "enabled": false,
          "loose": false,
          "trust_all": false
        }
      }
    ],
    "root": "/",
    "rewrite": false,
    "load_balancing": {
      "type": "RoundRobin"
    },
    "client": {
      "retries": 1,
      "max_errors": 20,
      "retry_initial_delay": 50,
      "backoff_factor": 2,
      "call_timeout": 30000,
      "call_and_stream_timeout": 120000,
      "connection_timeout": 10000,
      "idle_timeout": 60000,
      "global_timeout": 30000,
      "sample_interval": 2000,
      "proxy": {},
      "custom_timeouts": [],
      "cache_connection_settings": {
        "enabled": false,
        "queue_size": 2048
      }
    },
    "health_check": {
      "enabled": false,
      "url": "",
      "timeout": 5000,
      "healthyStatuses": [],
      "unhealthyStatuses": []
    }
  },
  "backend_ref": null,
  "plugins": [
    {
      "enabled": true,
      "debug": false,
      "plugin": "cp:otoroshi.next.plugins.OverrideHost",
      "include": [],
      "exclude": [],
      "config": {},
      "bound_listeners": [],
      "plugin_index": {
        "transform_request": 0
      }
    },
    {
      "enabled": true,
      "debug": false,
      "plugin": "cp:otoroshi_plugins.com.cloud.apim.otoroshi.extensions.aigateway.plugins.OpenAiCompatProxy",
      "include": [],
      "exclude": [],
      "config": {
        "refs": [
          "provider_6699f127-34d1-4fef-b3ad-6bcf592ed9c6"
        ]
      },
      "bound_listeners": [],
      "plugin_index": {}
    }
  ],
  "kind": "proxy.otoroshi.io/Route"
}
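In this route, the `config.refs` array of the OpenAiCompatProxy plugin points at the provider, which in turn lists the tool function. To make that link explicit, the sketch below extracts the provider ids from a trimmed stand-in for the route. `providerRefs` is a hypothetical helper written for illustration only.

```javascript
const PROXY_PLUGIN =
  "cp:otoroshi_plugins.com.cloud.apim.otoroshi.extensions.aigateway.plugins.OpenAiCompatProxy";

// Minimal stand-in for the route, keeping only the fields used here.
const route = {
  frontend: { domains: ["wasm-tool-function.oto.tools"] },
  plugins: [
    { enabled: true, plugin: "cp:otoroshi.next.plugins.OverrideHost", config: {} },
    {
      enabled: true,
      plugin: PROXY_PLUGIN,
      config: { refs: ["provider_6699f127-34d1-4fef-b3ad-6bcf592ed9c6"] },
    },
  ],
};

// Hypothetical helper: lists provider ids referenced by enabled proxy plugins.
function providerRefs(routeEntity) {
  return routeEntity.plugins
    .filter((p) => p.enabled && p.plugin === PROXY_PLUGIN)
    .flatMap((p) => p.config.refs || []);
}

console.log(providerRefs(route));
```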